
HP OpenVMS has always been known as a pioneering environment for high availability, a reputation that dates back to the origins of OpenVMS more than 25 years ago. One of the most profound innovations in OpenVMS was the development of clusters.

Qualified for up to 96 computer nodes and more than 3,000 processors, OpenVMS clusters afford virtually 100 percent uptime and expand the multiprocessing capabilities of the computing environment.

HP OpenVMS Cluster software is an integral part of the OpenVMS operating system, providing the basis for many of the key capabilities used by OpenVMS enterprise solutions. A full "shared everything" cluster design that has existed for more than 20 years, OpenVMS Cluster software allows maximum expandability, scalability, and availability for mission-critical applications.

A key enabler of the OpenVMS role in the Adaptive Enterprise, OpenVMS Cluster software has been an industry bellwether technology for enterprise environments that must adapt to growth and change over time. These capabilities also make the total cost of ownership (TCO) for an OpenVMS application environment extremely attractive.

With OpenVMS Version 8.4, we raise the bar further.

New benefits include:

- Lower cost of deploying clusters: TCP/IP can be used for all cluster communication

- Improved Scalability

- Improved Performance

Clusters over TCP/IP

Before Version 8.4, a multi-site OpenVMS cluster required LAN bridging or Layer 2 services. Layer 2 services not only come at a cost but are also unavailable in many countries. In addition, customers need specialized infrastructure and staff to deploy a multi-site disaster-tolerant cluster. This has hindered the widespread adoption of clusters. With OpenVMS Version 8.4, cluster communication can run over TCP/IP, which is used worldwide. This makes it easier and more cost-effective for customers to deploy multi-site disaster-tolerant clusters.


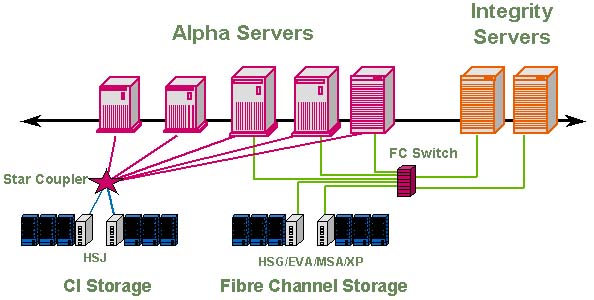

An HP OpenVMS Cluster is a highly integrated organization of systems (VAX and HP AlphaServer systems, or AlphaServer systems and HP Integrity servers), applications, operating systems, and storage devices. These systems can be connected to each other and to storage components in a variety of ways, depending on the needs of your business.

OpenVMS Cluster systems give you the ultimate in a highly available, scalable, and flexible computing environment. The cluster also allows you to connect systems of all sizes and capacities and achieve an easy-to-manage, single virtual system. And the inherent capabilities of OpenVMS clustering allow systems to be connected at distances from just a few inches up to 500 miles apart.

» What is an OpenVMS Cluster?


As OpenVMS moves to the Integrity server line, the role of clustering in giving customers seamless integration into their environment cannot be overstated. Because of its inherent mixed-architecture clustering capability, OpenVMS allows both AlphaServer systems and Integrity servers to be mixed within the same cluster environment.

At first release on the Integrity server line, OpenVMS mixed-architecture clusters can contain either VAX and AlphaServer systems, or AlphaServer systems and Integrity servers, in a fully supported production environment. Including VAX systems in a mixed-architecture cluster that contains Integrity servers is allowed only for development and migration; it is not a supported configuration (if a problem arises because of the VAX system, the user may be advised to change the configuration to one of the two supported pairs).


Also, at first shipment of v8.2, the supported size of a cluster combining AlphaServer systems and Integrity servers is limited to 16 total nodes (up to 8 AlphaServer systems and 8 Integrity servers). With OpenVMS v8.2-1 on Integrity servers, the node count limitation has been removed for clusters that include Integrity server nodes. These mixed-architecture clusters therefore follow the overall maximum OpenVMS cluster size of 96 nodes, with no architecture-specific node count limits.
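The support rules in the preceding paragraphs can be summarized as a small configuration check. This is a hypothetical Python sketch, not an HP tool or API: the function name `check_cluster`, the architecture labels, and the version strings are illustrative assumptions, and it assumes the v8.2 limits (16 total, 8 per architecture) apply only to clusters that mix AlphaServer and Integrity nodes.

```python
from collections import Counter

# Hypothetical helper, not an HP tool: rules transcribed from the text above.
MAX_CLUSTER_NODES = 96      # overall OpenVMS cluster size limit
V82_MIXED_TOTAL_LIMIT = 16  # v8.2 first shipment, AlphaServer + Integrity
V82_PER_ARCH_LIMIT = 8      # up to 8 of each architecture at v8.2


def check_cluster(nodes, version):
    """Return a list of support problems for a proposed cluster.

    nodes   -- list of architecture names: "VAX", "Alpha" or "Integrity"
    version -- "8.2" (first shipment) or "8.2-1"
    """
    counts = Counter(nodes)  # missing architectures count as 0
    problems = []

    # VAX alongside Integrity is allowed for development and migration only.
    if counts["VAX"] and counts["Integrity"]:
        problems.append("VAX with Integrity: development/migration only, not supported")

    total = sum(counts.values())
    if total > MAX_CLUSTER_NODES:
        problems.append(f"{total} nodes exceed the {MAX_CLUSTER_NODES}-node maximum")

    # Assumption: the v8.2 limits apply only to AlphaServer + Integrity
    # clusters; v8.2-1 removes them.
    if version == "8.2" and counts["Alpha"] and counts["Integrity"]:
        if total > V82_MIXED_TOTAL_LIMIT:
            problems.append(
                f"{total} nodes exceed the v8.2 mixed-cluster limit of {V82_MIXED_TOTAL_LIMIT}")
        for arch in ("Alpha", "Integrity"):
            if counts[arch] > V82_PER_ARCH_LIMIT:
                problems.append(
                    f"{counts[arch]} {arch} nodes exceed the v8.2 "
                    f"per-architecture limit of {V82_PER_ARCH_LIMIT}")

    return problems


# 9 AlphaServer + 2 Integrity nodes at v8.2: within 16 total, but too many Alphas.
print(check_cluster(["Alpha"] * 9 + ["Integrity"] * 2, "8.2"))
# prints ['9 Alpha nodes exceed the v8.2 per-architecture limit of 8']
```

The same 11-node configuration returns an empty list for "8.2-1", reflecting the removal of the per-architecture limit.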

Fibre Channel SAN storage can be shared freely between the AlphaServer system and Integrity server architectures, since the on-disk file structure is identical on both. Additionally, applications can run on either architecture within the cluster, allowing users throughout the cluster to use them as needed. With OpenVMS v8.2-1 on Integrity servers, users can also have 2-node shared SCSI storage via the MSA30MI storage shelf (both nodes must be Integrity servers, as AlphaServer systems are not supported in this configuration).

For more information, refer to the following whitepaper, which describes how this invaluable flexibility can help you evolve your environment to Integrity servers.

» HP OpenVMS Clusters move to the Intel® Itanium® architecture.


OpenVMS Clusters utilize several key technologies to provide the capabilities OpenVMS customers rely on for their high-availability environments.

- Fibre Channel storage, within a shared SAN environment, provides the key storage connectivity to all members of the cluster environment. For the latest updates on the Fibre Channel storage environment, visit: http://h71000.www7.hp.com/openvms/fibre/index.html

- Host-based Volume Shadowing (HBVS) is a key software-based mirroring capability that can provide multiple copies of a storage environment, allowing improvements in availability and performance in clustered environments. For a detailed overview of this feature, visit: http://h71000.www7.hp.com/openvms/products/volume-shadowing/index.html

- OpenVMS Galaxy is a feature used in many OpenVMS clustered environments. Within a system with more than one Galaxy partition, customers can use the shared-memory cluster interconnect to connect these partitions as individual cluster members, and each member can be attached to other cluster nodes. For more information on Galaxy, visit: http://h71000.www7.hp.com/availability/galaxy.html

- OpenVMS Clusters are a key component of the High Availability and Disaster Tolerance (HA/DT) service offerings from HP. For more information on HA/DT with OpenVMS, visit: http://h71000.www7.hp.com/availability/index.html

- A key component of any OpenVMS Cluster environment is the ability to back up and restore key application data. OpenVMS provides a full array of data-protection offerings for flexible, mission-critical management of key application data. For more information on these offerings, visit: http://h71000.www7.hp.com/openvms/storage/enterprise.html
